Leslie Fisher recently posted a link to a text compactor. She described the site as a way to simplify text.

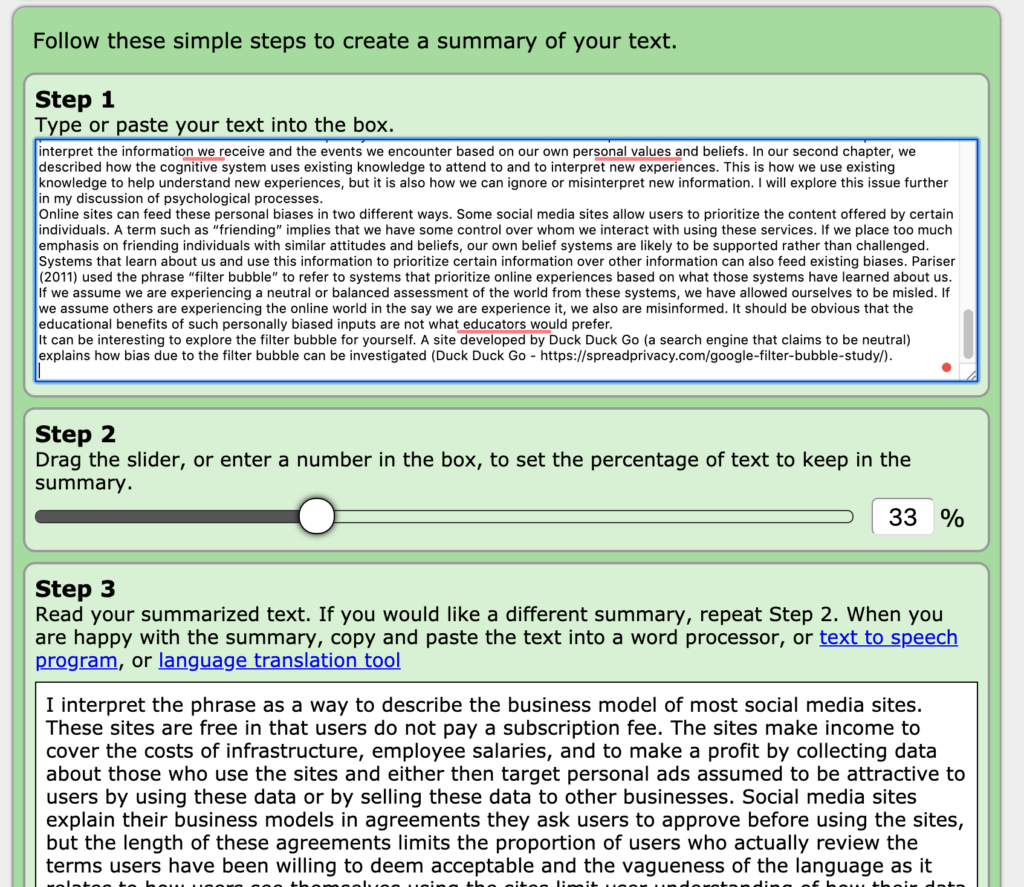

I just finished writing a piece on the causes of adolescent screen time, so I thought I would give it a try. I first asked the compactor to reduce my full 4,500-word project, but that proved to be beyond the site's capacity. I had recently published a section of the same project as a blog post: roughly 1,100 words focused on surveillance capitalism. So I tried this shorter piece instead. The summary the service generated, reduced to 33% of the original length, is included below.

Shortened summary.

I interpret the phrase "surveillance capitalism" as a way to describe the business model of most social media sites. These sites are free in that users do not pay a subscription fee. To cover the costs of infrastructure and employee salaries, and to make a profit, the sites collect data about the people who use them. They then either use these data to target personal ads assumed to appeal to those users or sell the data to other businesses. Social media sites explain their business models in agreements they ask users to approve before using the sites. However, the length of these agreements limits how many users actually review the terms they have accepted. The vagueness of the language, relative to how users see themselves using the sites, also limits their understanding of how their data are being used (Zuboff, 2019).

Much of the negative connotation associated with surveillance capitalism stems from the techniques some online companies use to collect user information. These companies use the information both to make their ads more appealing and to increase the value of the personal information they can sell as a resource.

To collect as much data, and as varied data, as possible, sites seek to encourage heavy and exclusive use. Put another way, to gain a business advantage, sites are motivated to adopt strategies that drive heavy use. Content that offers such stimuli attracts viewers and increases the information collected about them as a byproduct of their online attention. This holds whether the content is prioritized by online services or highlighted by those of us who create it or simply share it. I will explore this issue further in my discussion of psychological processes.

Online sites can feed personal biases in two ways. First, some social media sites allow users to prioritize content from certain individuals. Second, systems that learn about us can use what they learn to prioritize some information over other information. A site developed by DuckDuckGo (a search engine that claims to be neutral) explains how bias caused by the filter bubble can be investigated (DuckDuckGo, https://spreadprivacy.com/google-filter-bubble-study/).
